Opening Big JSON Files: Challenges and the Solution

JSON in the modern computer era

JSON is a human-readable and self-describing format, which emphasizes readability with an exhibiting syntax. JSON is used to serialize and encapsulate data, it supports hierarchical structures, simplifying the storage of related data in a single document and presenting complex relationships.

It is favored and covers most of the transmissions on the web. It is the most commonly used format to extract, store, and interchange data between computers. Because it is lightweight, compact, and text-based parsing and therefore using it is straightforward.

JSON is language-independent, so it’s valuable for data exchange between heterogeneous systems. Most programming languages offer simplified built-in support for JSON serialization/deserialization.

JSON file size can and would extend

JSON is becoming the new CSV because it’s becoming a widespread data format. For instance, one of the most significant shifts in application design over the past decade was a move to REST APIs with payloads formatted in JSON. This shift made JSON the chosen format for most of the web transitions.

JSON use cases are so extensive, database dumps and exports are among the most common ones. It is a widely used file format for NoSQL databases such as MongoDB, Couchbase, and Azure Cosmos DB. Most of these databases have JSON as an export option too.

Modern web-based tools and systems like GraphQL that rely on fully typed data, use JSON language too. While working with API calls in web-based solutions, instead of laboring with rigid server-defined endpoints, you can send queries to get precisely the data you are looking for in one request, thanks to JSON structured schema.

Another important application is logging system usage in JSON format. Each software or system, major or smallish, has a log system to trace its conduct. Log systems are everywhere and many of them use JSON as their format, like Amazon CloudWatch Logs.

Logging is useful for retaining, debugging, analyzing, and scanning while a system is functioning. The problem with log files is that text data is unstructured. As a result, it’s difficult to filter and put a query on the log files for information. The goal of JSON logging is to solve these problems and many others. There are many ideas and methods for storing each log entry and JSON format is the best, and the most common one. JSON logging is a kind of structured logging, meaning you’ll have your log data parsed and stored in a structured JSON format.

In this approach, each entry of the Log result would become a JSON object, and they would be appended to each other which would make up a JSON file consisting of multiple JSON objects. We have published a thorough post about this topic, read more about it here.

Logging a system’s manners is important, and since log systems generate logs at each moment, they can extend easily. It is important to be able to work with a log result, which has grown in size.

There is no hard limit defined by JSON specification, so there is practically no limit on the maximum size of a JSON file. It can be as long as the space is demanded by the contents to be stored. So, in conclusion, with these modern tools, it is noncontroversial to assume that there would be large JSON files.

Here come the real challenges in working with BIG JSON files

When a user needs to open a 50MB — let alone bigger sizes like gigabytes — JSON file, text editors are the most common tools available. Eventually, the user would see a frozen, not responding window or worse, an error indicating you exceeded the size limit.

Most of the text editors would crash and cannot open the file. In some cases, the tool would only load a restricted size from the start of the file. In a nutshell, they cannot open a big JSON file.

This could be because most tools for opening JSON files, such as text editors, were not designed with large JSON files in mind. After trying to open a big JSON file, you face some known and practical challenges in working with them. For example, it’s easy to run out of memory, so managing resources is one of the biggest issues. The user would run out of memory before even being able to see the raw data.

Dealing with big JSON files

Now that we expressed the reasons and consequences, it is time for the solution. One of the best approaches in parsing big JSON files is to implement a smart parsing algorithm for virtualizing the trace process of every node and data field. For the sake of speed and optimization in resource usage, this is important.

That’s exactly how we handled gigabyte visualization of JSON data files in our implementation. One of the most important bottlenecks in this process is to be able to visit each node once and then jump ahead and find another one without dropping performance in the process.

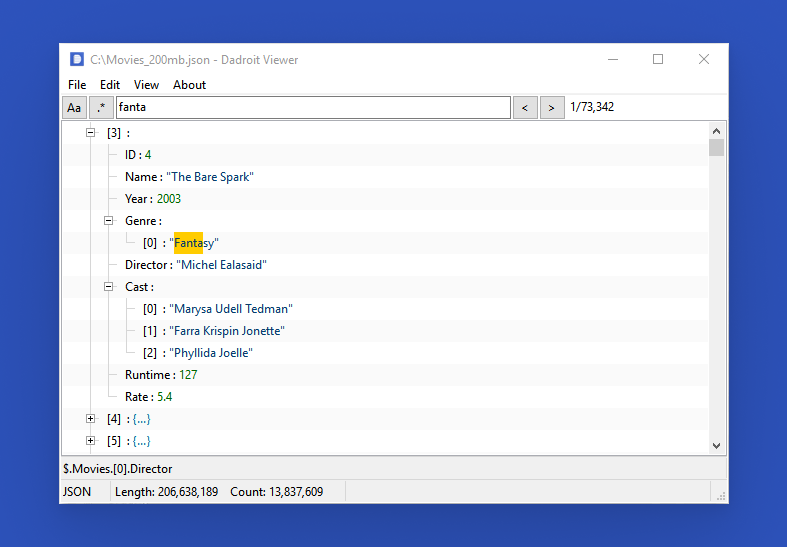

One of the biggest objectives we had in making Dadroit JSON Viewer and Dadroit V Web was the capability to open big JSON files smoothly. Since there was no such tool for opening, processing, and working with them properly. To fulfill these goals in JSON Viewer, we had to optimize resource usage and be less IO-intensive.

After nailing all these points, when you work with a big JSON file, it would be so beneficial to be able to work with its nodes, objects, and fields like a data field in a data management tool, not plain text. With Dadroit, it is effortless to have a better vision while working with each node and field in a big JSON.

Another good thing Dadroit provides for working with a big JSON file is illustrating the structure. The tree view structure, Dadroit JSON Viewer comes with, distinguishes presenting data types like arrays and objects. Which would come in handy in big JSON files with vast fields or large object nodes.

The cherry on the top

Features which we’ll express are important in both experienced and customary work with JSON data files, especially with rising in size criteria. For getting your desired value from your data, the search experience is important. If the JSON file is not formatted, it would get even trickier to spot and reach the value you are looking for.

Dadroit JSON Viewer provides a comprehensive search experience regarding these necessities. The instant and complete search experience in large JSON files is necessary, and Dadroit delivers it smoothly. The RegEx for finding data in a specific pattern is another complementary feature. Say you needed a date format search, or three-digit numbers only, Dadroit JSON Viewer gives you these search results specifically.

There is also an export option in the Dadroit JSON Viewer, which is useful for exporting a portion or whole data in a big JSON file. The minified export option is useful for optimized space usage in big JSON files, as minified data.

Time to compare Dadroit JSON Viewer with other options

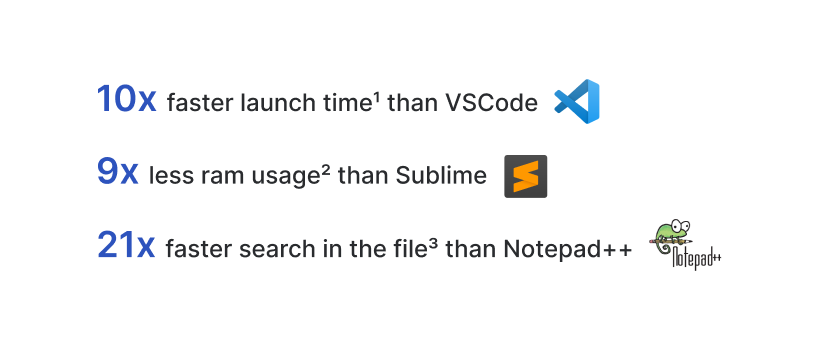

When searching for a tool to open a big JSON file, the most common options are text editors, browsers, and some other tools. Experimenting with these tools with a big JSON file demonstrates they do not function sufficiently in scaling to large sizes. In these cases, Dadroit JSON Viewer comes in and achieves it all.

While opening a big JSON file in these tools, even if the raw data fits into memory, the representation can raise memory usage much more. This is mostly because of lacking the maturity in JSON parsing and indexing the data. Which causes either slow processing (as your program swaps to disk) or crashing when systems run out of memory.

Search experience in text editors or any other tool in a big file is not sufficient at all, and it is mostly because of poor handling of big-size data. Dadroit JSON Viewer executes effective parsing and indexing algorithms in opening a big JSON file. Then uses these mature indexes to achieve instant search as well.

Last words

At the beginning of this article, we concluded that JSON is the most common format to exchange data between computers. Then we brought out why are there huge JSON files and why it is important to be able to open and view and work with them. It is being used in so many areas, and these modern tools generate big JSON files which need a proper tool to work with them.

Then we arrived at the main problem in working with huge JSON files, we mentioned that opening big JSON files has numerous challenges, which is mostly why there is no suitable tool for it. This can affect performance and abuse resource usage in the application — for example text editors — in a way that would mainly fail in opening big JSON files at the end.

Finally, we arrived at the pick of our article to introduce the solution. We built Dadroit JSON Viewer having all these needs and challenges in mind, and delivered results powerful in the matter of opening big JSON files. Dadroit JSON Viewer is the only tool available to have all these options and capabilities overall, for working with a big JSON file.

To wrap it up, available tools to open JSON files cannot scale and function properly when the JSON file grows in size. Dadroit JSON Viewer beats all the other solutions in performance and resource usage, and it will give you an adequate set of tools to work with, too.

Check it out and let us know if you want to know more. It is free and available for download for Mac, Windows, and Linux right now, you can check it out here.

Footnotes

-

Time to open 200MB JSON file with Dadroit vs Microsoft VSCode. This test is conducted using machine with Intel(R) Core(TM) i3-7100 CPU @ 3.91 GHz Processor, 8Gb RAM and 64bit Operating System. ↩

-

Memory usage to open 20MB JSON file with Dadroit vs Sublime. This test is conducted using machine with Intel(R) Core(TM) i3-7100 CPU @ 3.91 GHz Processor, 8Gb RAM and 64bit Operating System. ↩

-

Search result per second in 2MB JSON file with Dadroit vs Notepad++. This test is conducted using machine with Intel(R) Core(TM) i3-7100 CPU @ 3.91 GHz Processor, 8Gb RAM and 64bit Operating System. ↩